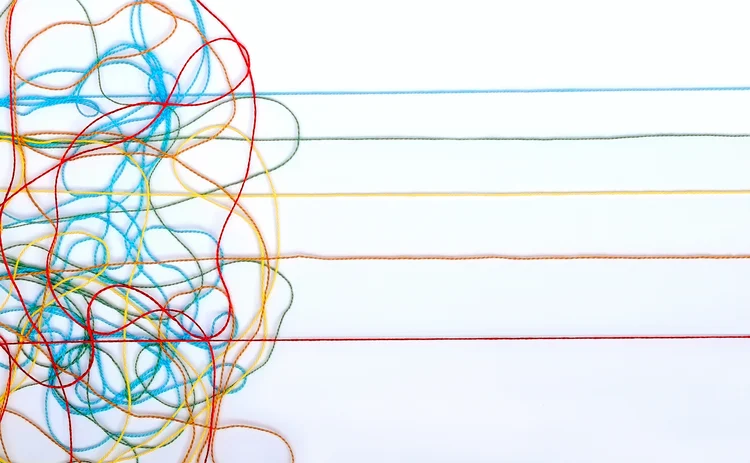

Quants see promise in DeBERTa’s untangled reading

Improved language models are able to grasp context better

Context, as they say, is everything – which is a big problem for investors when they try to use so-called large language models to weigh the sentiment of financial news. The models are notorious for misreading terms that could be either good or bad depending on what’s being talked about at the time.

A few methods have been tried to solve the problem, mostly using the idea that models can look at

Copyright Infopro Digital Limited. All rights reserved.
